Virtual <-> Actual (workshop @ KHiB, Bergen, Norway)

Five-day workshop at the Bergen National Academy of the Arts with Melinda Sipos, 2011

Actively or passively, we all take part in the immense data flow that surrounds us, and we may consider it, among its many aspects, a rich source of inspiration. Information architectures, interfaces, widgets and social networks begin to take on as much importance as urban conditions, built structures, objects and our social environment. The five-day course entitled ‘From virtual to actual’ explores the possibilities of translating phenomena that occur in our digital life into physical space and vice versa.

Through various activities led by the workshop tutors (discussions, presentations, research, strategies, etc.), workshop participants gain insight into the topic through active participation and critical reaction. The goal is to develop scenarios and build prototypes/artworks that embody a kind of transformation or implementation of a virtual phenomenon into a physical one or, the other way around, add a virtual aspect to a physical one. The result can be either analog or digital.

The actual workflow of the workshop started with a series of presentations covering concepts of media lab culture and individual projects related to the field, along with a basic overview of the current technologies that can be used to access digital presence (social network graphs, simple databases, mapping). Open databases, open APIs (Application Programming Interfaces) and open source software frameworks were introduced.

On the second and third days, collaborative idea generation and feedback discussions helped each participant find their own interest in molding the boundaries between presence as a physical entity and presence as a virtual one. The wide range of ideas covered diverse fields of contemporary discussion, such as wireless network territories that function as spaces for confronting the public with the private, or virtual celebrities that exist as memes in the networked society.

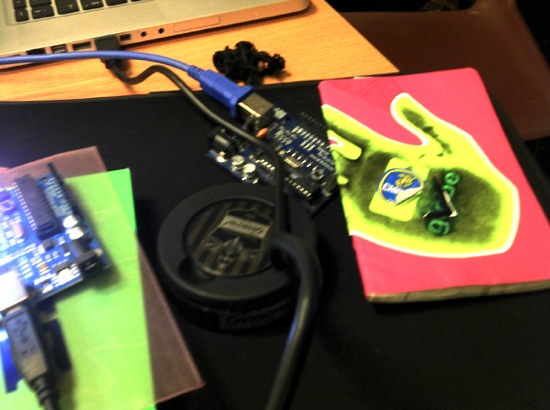

Day 4: hands-on. Broken electronics, fans and Arduinos occupied the room. Several strategies were introduced: reverse engineering (to understand existing, functional technologies at their basic level), modding / hacking, a critical attitude (being aware of the long-term consequences of what one does within the digital space), and rapid prototyping (getting quick results and testing the system iteratively before implementing it in a more solid state).

The workshop output turned out to be multilayered. Some ideas remained general concept descriptions with some visual representation (mockups, diagrams, drawings). One interesting result worth mentioning is a tangible celebrity meter: the number of “likes” of a virtual celebrity controlled a fan that inflated a plastic bag. Thus the breathing, “liveliness” and size of the physical plastic bag were controlled by the single clicks of a huge number of people (the total number of likes was around 37 million… guess who that might be..?). Olav Paulsen (Egil) wrote a PHP script that extracts the number of likes of the selected celebrity using Facebook’s Graph API. The result was then saved to files and read by Pure Data, which controlled the Arduino with the fans. As a simple, minimal visual setting, the number of likes was projected back onto the bag. Another interesting project was a kinetic, reversed animation system by Jacob Alro where “the screen is the moving part, the object remains fix” (see video below).
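To make the celebrity meter's data path a bit more concrete, here is a minimal sketch of the step between the saved like count and the fan. It is written in Python rather than the PHP / Pure Data / Arduino chain used at the workshop, and it is not the participants' code. The function names, the linear mapping and the 40 million ceiling are assumptions chosen only for illustration; the 0-255 range assumes an 8-bit PWM output of the kind Arduino boards typically offer.

```python
def parse_likes(text: str) -> int:
    """Parse a like count from the text of a saved file (hypothetical format:
    a single integer, possibly surrounded by whitespace)."""
    return int(text.strip())


def likes_to_pwm(likes: int, max_likes: int = 40_000_000) -> int:
    """Map a like count linearly onto an 8-bit fan duty value (0-255).

    max_likes is an assumed ceiling, not a figure from the workshop;
    counts outside 0..max_likes are clamped.
    """
    if max_likes <= 0:
        raise ValueError("max_likes must be positive")
    clamped = min(max(likes, 0), max_likes)
    return int(clamped / max_likes * 255)


if __name__ == "__main__":
    # Example with the rough figure mentioned in the text (about 37 million).
    duty = likes_to_pwm(parse_likes("37000000\n"))
    print(duty)
```

A linear mapping is the simplest choice; with counts that grow over several orders of magnitude, a logarithmic mapping might give the bag a more visible "breathing" range, but that is a design decision the original text does not describe.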

We were really impressed by the people and the workshop. Thanks also to Brandon Labelle for the organization and coordination of this collaboration.

Permutation

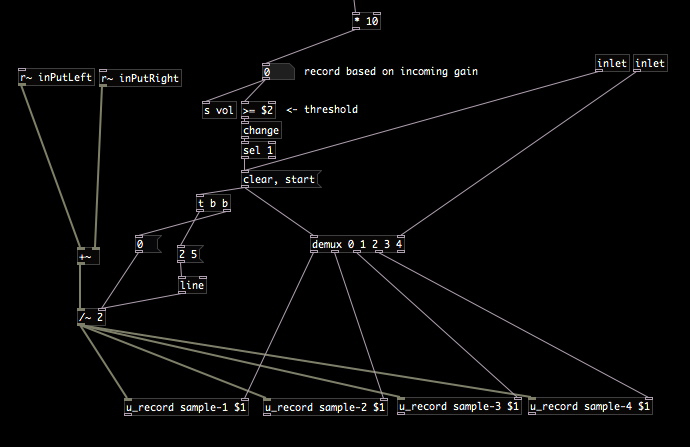

Permutation is a sound instrument developed for rotating, layering and modifying sound samples. Its structure was originally developed for a small MIDI controller with a few knobs and buttons. The controller is then mounted on a broken piano, along with several contact microphones. The basic idea of using a dead piano as an untempered, raw sound source reflects on the central status of this instrument in Western music over the past few hundred years. Contact mics and prepared pianos, as we know, represent a constant dialogue between classical music theory and other, more recent free forms of artistic and philosophical representation. The aim of Permutation, however, is a bit different. It is a temporal extension of gestures made on the whole resonating body of a uniquely designed sound architecture (the grand piano). In fact, the piano is so well designed that it used to serve as a simulation tool for composers to mimic a whole group of musicians. A broken, old wooden structure has so many characteristic sound deformations that it becomes an intimate space for creating strange and sudden sounds that can be rotated and folded back onto themselves.

The system is built for live performances and has been used for making music for the Artus independent theatre company. The Pure Data patch can be downloaded from here. Please note that it was rapidly developed for use with a MIDI controller, so the patch may be confusing. The patch contains some code from the excellent rjdj library on GitHub.

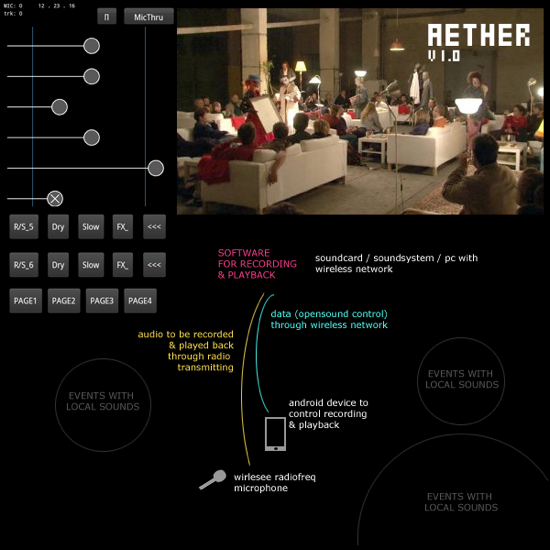

Aether

Aether is a wireless remote application developed for collecting spatially distributed sound memories. Its aim is to collect and use sound events on the fly. The client interface is shown on the left, the performance environment we use it in can be seen on the right, and a diagram of the setup is at the bottom of the image.

While walking around a larger space (one that can be covered by an ordinary wireless network), one can send and receive realtime data between the client app and the sound system. All the logic, synthesis and processing happen on the server that provides the network (a laptop located somewhere in the space), while control, interaction and site-specific probability happen on the portable, site-dependent device. The system was used in several performances of the Artus independent theatre company where action and movement had to be recorded and played back immediately.

The client app runs on an Android device that is connected to a computer running Pure Data through a local wireless network (using the OSC protocol). The space for interaction and control extends to the limits of the wireless network coverage and the maximum distance between the radio frequency microphone and its receiver. The system has been tested and used in a factory building over an area of up to 250-300 square meters.

The client code is based on an excellent collection of Processing/Android examples by ~popcode that is available to grab from Gitorious.

The source is available from here (note: the server Pd patch is not included because of its ad-hoc spaghettiness)

Collected texts

My recent research texts and publications are available for download under the Creative Commons BY-SA 2.5 licence.

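Digitális hang reprezentációk [Digital Sound Representations] (pdf) / DLA research, 2011

Tértörések [Spatial Fractures] (pdf) / DLA research, 2010

A nyílt forrás filozófiája, kulturális gyakorlata, megjelenési formái a művészetben és a tervezésben [The Philosophy, Cultural Practice and Forms of Appearance of Open Source in Art and Design] (pdf) / DLA research, 2010

Emergens Rendszerek: Természeti Folyamatok, és Mesterséges Terek Kapcsolata [Emergent Systems: The Relationship Between Natural Processes and Artificial Spaces] (pdf) / degree thesis, 2008

Vizuális Programozás [Visual Programming] (pdf) / comprehensive exam paper, 2007

Zenei Vizualizációk [Music Visualizations] (pdf) / comprehensive exam paper, 2006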

transform@lab

As a collaboration of several universities across Europe, including Gobelins (Paris, F), University of Wales (Newport, UK) and Moholy-Nagy University of Design & Arts (Budapest, H), Transformatlab aims to be a series of workshops related to cross-media projects, including design, emerging technologies, nonlinear narratives and gaming in the culture of our networked society. A diverse set of interaction systems was presented to workshop participants. We introduced the physical sensor capabilities of Android tablet devices and computer vision using Kinect. This example is one of the many projects that were developed during the workshop. The prototype was developed from scratch within two days using the OpenKinect library wrapper for Java.